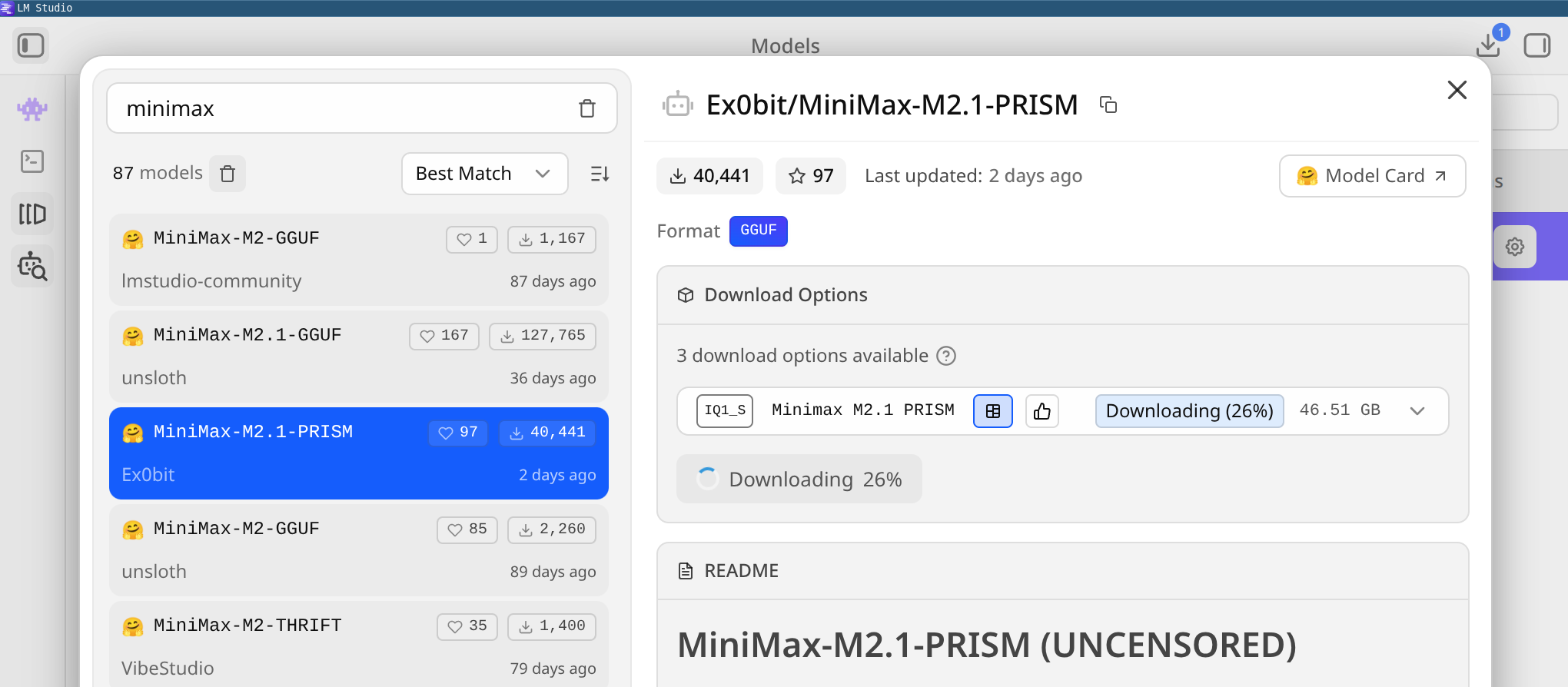

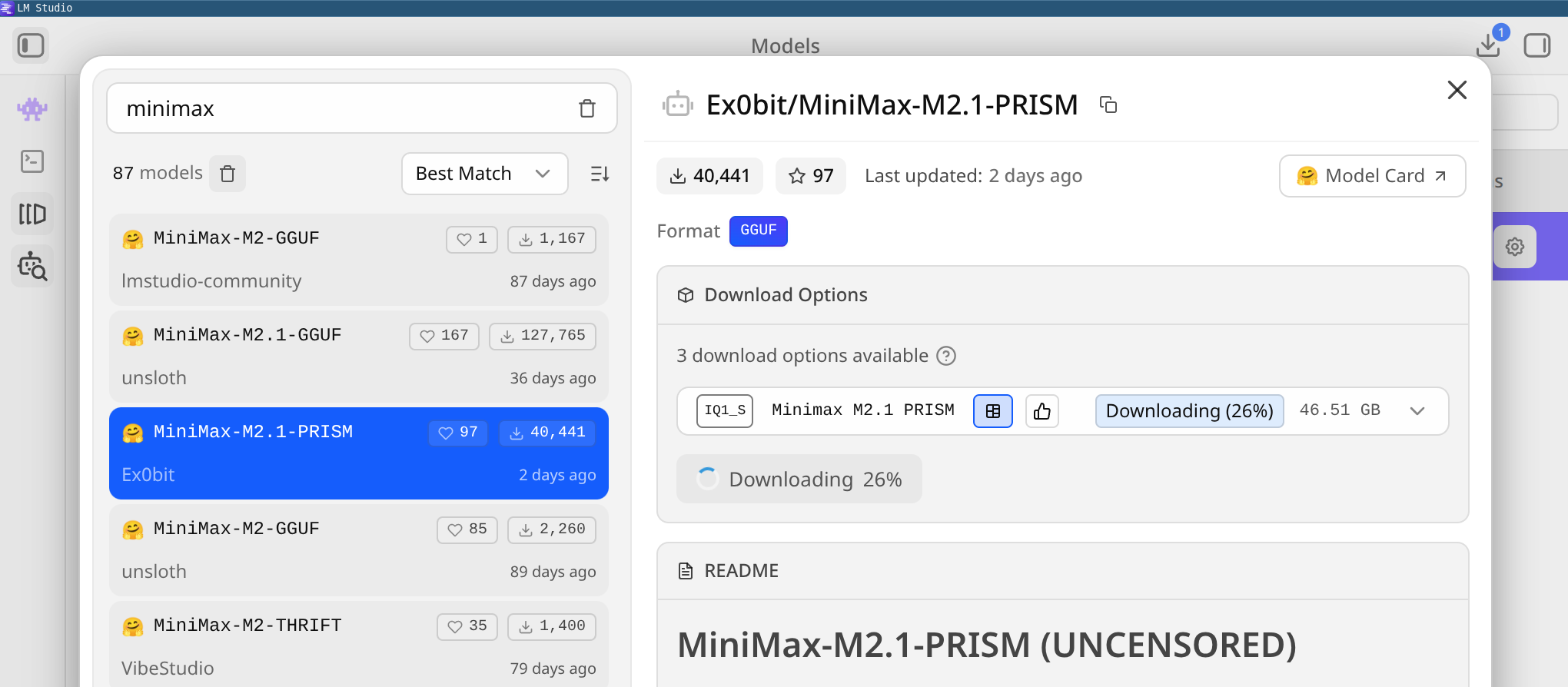

If you use IntelliJ, the Continue plugin can connect to a local Ollama instance. OpenClaw says it supports MiniMax M2.1, and there are docs on how to use it through LM Studio, but the onboarding wizard doesn't seem to have an LM Studio option :bunthinking:
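
For the Ollama side, it can help to confirm the local instance is actually up before pointing Continue at it. Here's a rough sketch that asks Ollama which models it has installed. It assumes Ollama is on its default address (`http://localhost:11434`) and uses its `/api/tags` endpoint; the function names are just made up for this example, and none of this comes from Continue itself.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default address.
DEFAULT_BASE_URL = "http://localhost:11434"


def tags_url(base_url: str = DEFAULT_BASE_URL) -> str:
    """Build the URL of Ollama's model-listing endpoint."""
    return base_url.rstrip("/") + "/api/tags"


def parse_model_names(body: str) -> list[str]:
    """Extract model names from an /api/tags JSON response body."""
    data = json.loads(body)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = DEFAULT_BASE_URL,
                      timeout: float = 2.0) -> list[str]:
    """Return installed model names, or [] if the server can't be reached."""
    try:
        with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
            return parse_model_names(resp.read().decode("utf-8"))
    except (urllib.error.URLError, OSError, ValueError):
        # Not running, wrong port, or an unexpected response body.
        return []


if __name__ == "__main__":
    models = list_local_models()
    print(models if models else "No models found (is Ollama running?)")
```

If that prints an empty result, the plugin won't be able to reach the instance either, so it's worth sorting out before touching the IDE settings.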