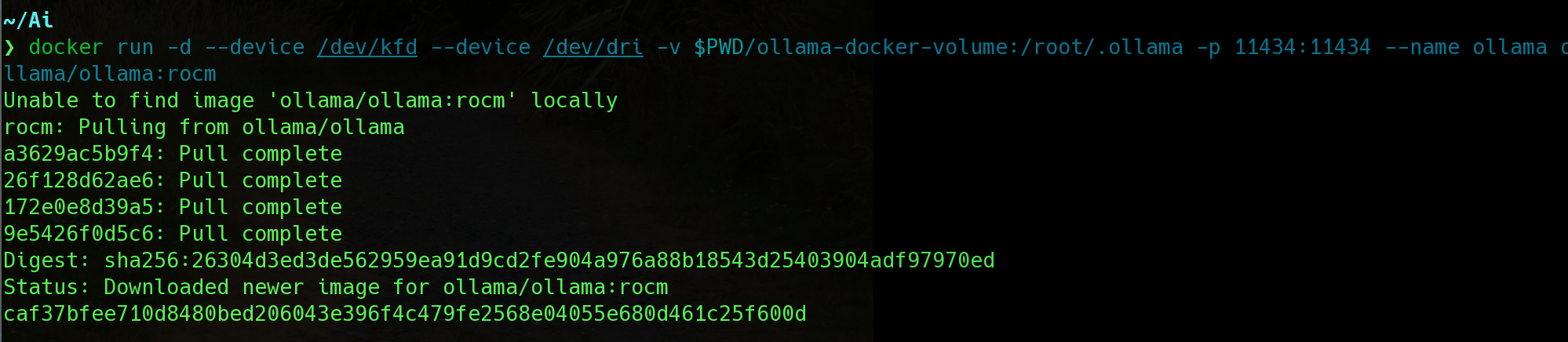

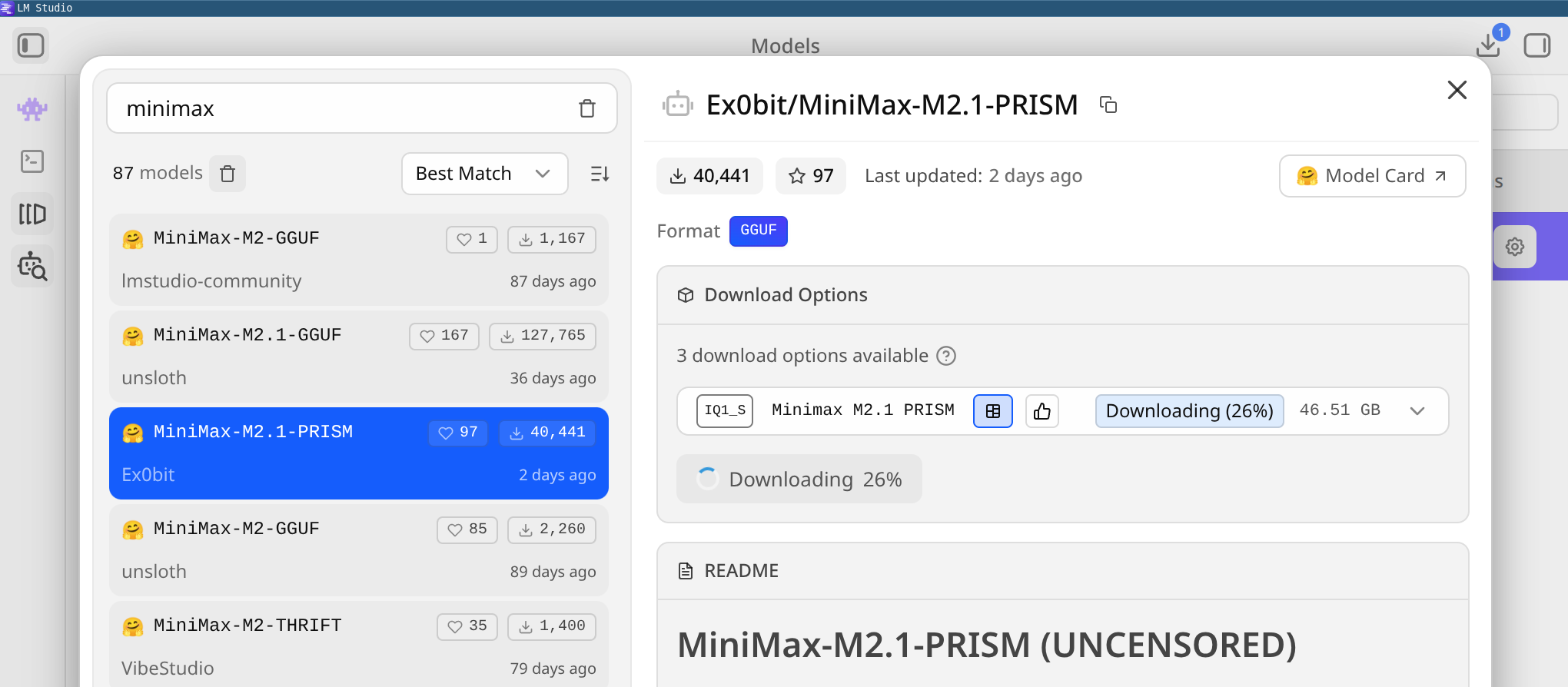

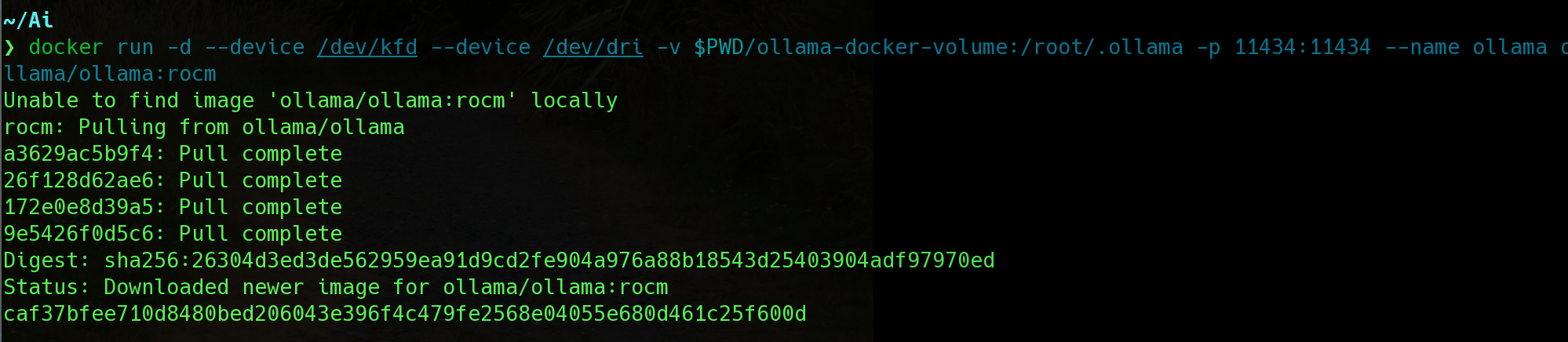

Alright, well, I might as well give this a shot. I wonder if I can get my agent banned with the right system prompt. ... I haven't used Ollama in forever. What local models are people using these days? I've got 32GB of VRAM.

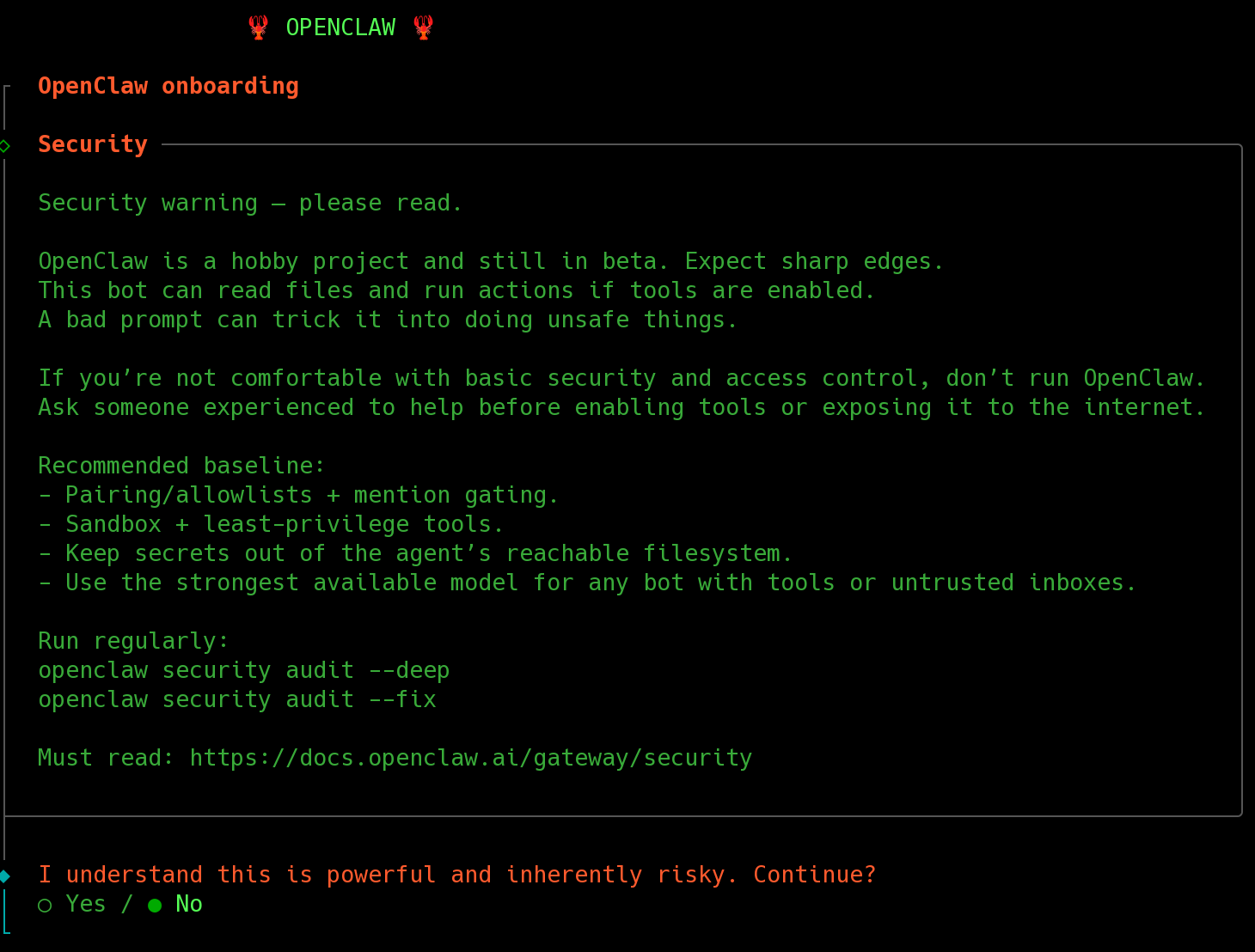

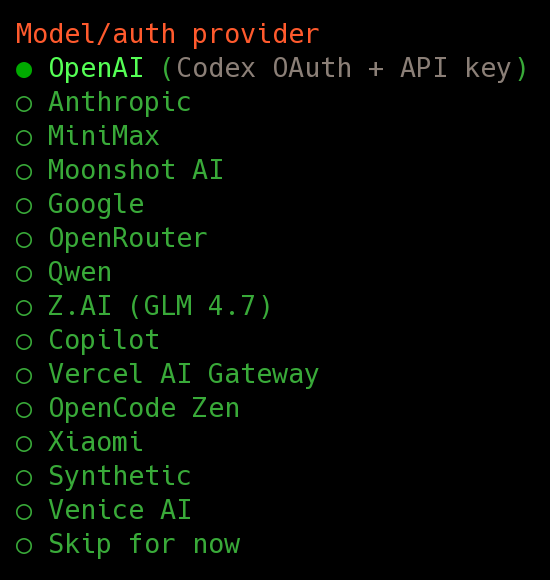

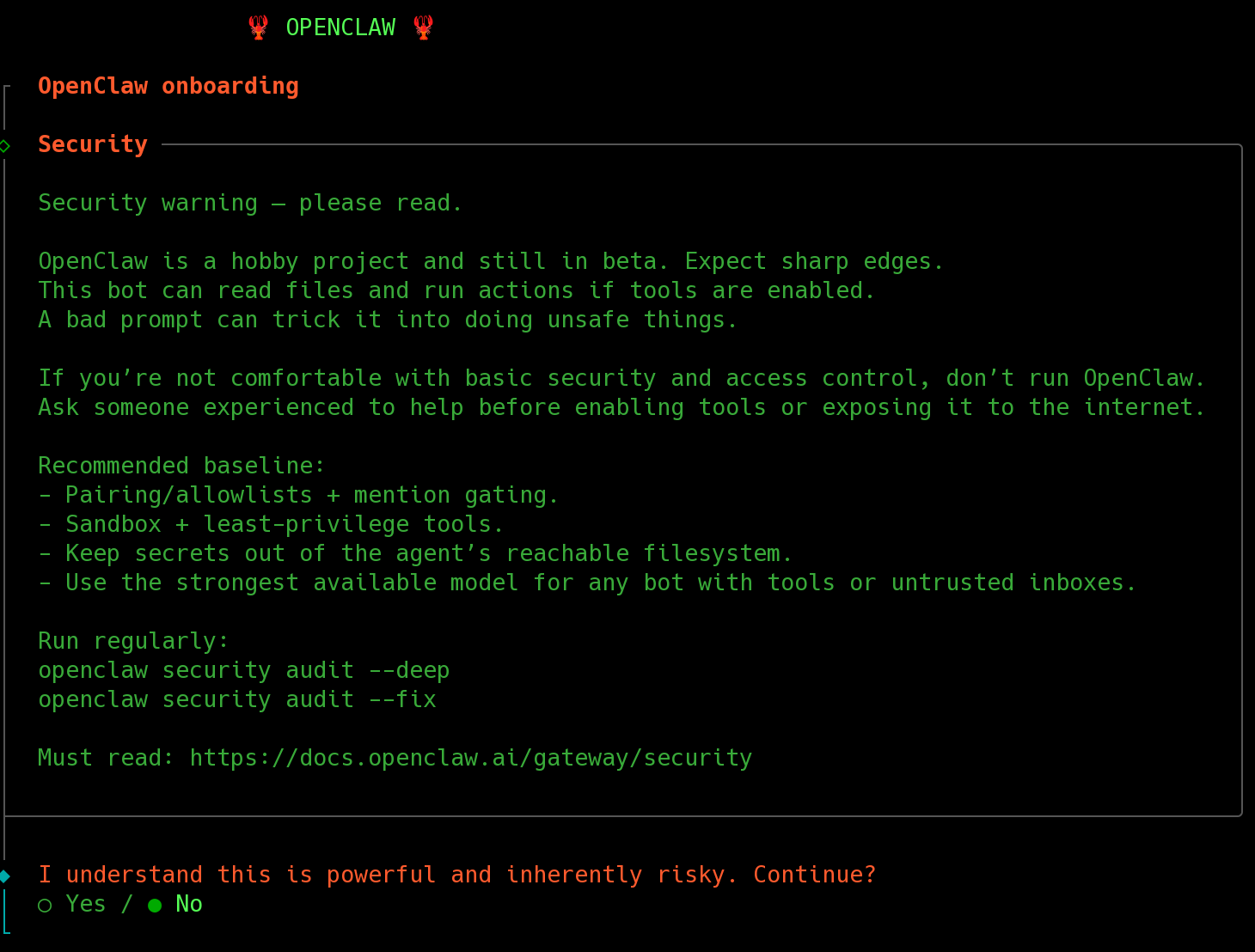

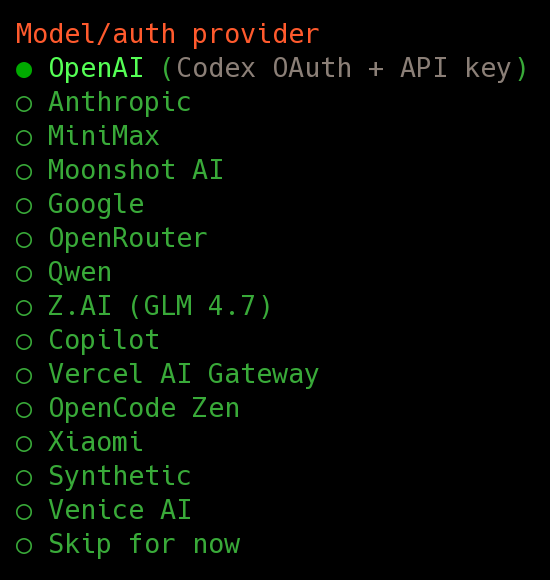

oh god ... well Openclaw looks terrifying ... and no Ollama support ... not sure if it supports local models at all! Don't want it blowing through the GitHub tokens I get from work either ... :bunthinking: